There’s been a lot in the press and on social media in recent months about AI for speech. It seems artificial intelligence might offer benefits for many people with communication impairments. It was a chance remark that made me realise AI for speech has been present in my life for years, as it’s integral to word prediction. More recently, AI has become integrated into AAC voices. The latest is how it can help people with dysarthria or voice loss, and other new advances are on the horizon.

AI for speech dysarthria

Well, that’s my diagnosis, so over 2 years ago I decided to take part in a trial of voice recognition software, also called speech assistance software. The idea is that AI would allow my voice to be translated from something unintelligible to many people into spontaneously recognisable speech. This supposedly could then be used for a variety of things, including everyday conversation, speech-to-text for written work and giving commands to my home environmental control system.

Will AI for speech help people like me?

The thought of this was exciting. I could see some real benefits as a student, for instance spontaneity in a lecture. Currently, if I want to contribute I rely on my assistant to repeat verbatim what I say. When I’m writing, whether it’s an email or a 10,000-word piece of assessed work, the process is laborious. My PA scribes my speech, which I may have to repeat several times, or they wait whilst I input on my communication device. And, if I wanted to give commands to my home environmental system, I could do it without having my tech by my side.

Trialling the set-up of AI for speech

Initially, it was a little daunting to find I would need to repeat words 30 times consistently to programme the software. However, I decided to give it a go. Sadly, my dysarthria means my voice is very inconsistent. In any verbal utterance from a phrase to a longer sentence, my output can be slurred or I can sound hoarse. The speed at which I speak can vary from a rushing train to a slithering snail. Then alongside all this, I can one second appear to whisper and the next I experience an uncontrolled explosion of power. All of this is completely outside of my control.

Why this type of AI for speech didn’t work for me (this time)

My particular form of dysarthria, caused by my cerebral palsy, affects all the muscles of my face, jaw, tongue and throat, especially when I try to control my output and be as clear as possible in my speech. The result is I regularly experience physical speech fatigue, and this results in having to stop speaking. This means I use AAC every day with people who don’t know me well, and for out-of-context conversations with my team.

Following several attempts at programming the software I spent considerable time reflecting on the process. This involved considering the software, my access and my communication needs, and regretfully I decided it was not for me. The best way forward is still for me to use my communication aid.

Thank goodness I have a fallback with AAC

I’m maybe lucky compared to others with dysarthria. I have access to AAC and this means I also have easy access to my home environmental controls through apps. I can draw curtains, open doors, do the lights and sort my varied media needs at the touch of an icon. My physical disability means I’ve got my lovely personal assistants 24/7 to facilitate anything else I need.

Having an effective method of communication is important. Much as I loved the thought of AI for speech I know now I would prefer to keep my voice for interaction with those I know well. I am already able to access all my Apple devices with my fingers or a stylus and have everything I need programmed.

The other thing that I really didn’t like was the software couldn’t take account of the explosive nature of my speech. On several occasions, the software gave me feedback telling me not to shout, something that I cannot change. It was actually a bit demoralising when I was trying really hard to bring clarity to my speech so the software would work.

I can clearly see the benefits for some people of having this type of software. It will make a real difference for some, especially if they do not have access to AAC. Anything that can improve communication has to be a good thing. Sadly, this particular AI for speech development is not for me.

AI for AAC voices

Until I was 15 I was an American child, as decent British AAC voices just didn’t exist. At 15 I became Lucy, an Acapela British voice, and she is still with me on my iPad. I understand Lucy took 3 months to make in a lab, ultimately providing a consistent high-quality voice. From an identity perspective, it’s really important to be able to like your own voice.

These developments are really exciting. Whilst I identify with Lucy, what I find challenging and frustrating is being in a room of other female AAC users talking in my voice. And, of course, all the males sound the same too. In real life, everyone has their own unique voice, so I see AI as a great way forward for AAC personalisation and developing identity.

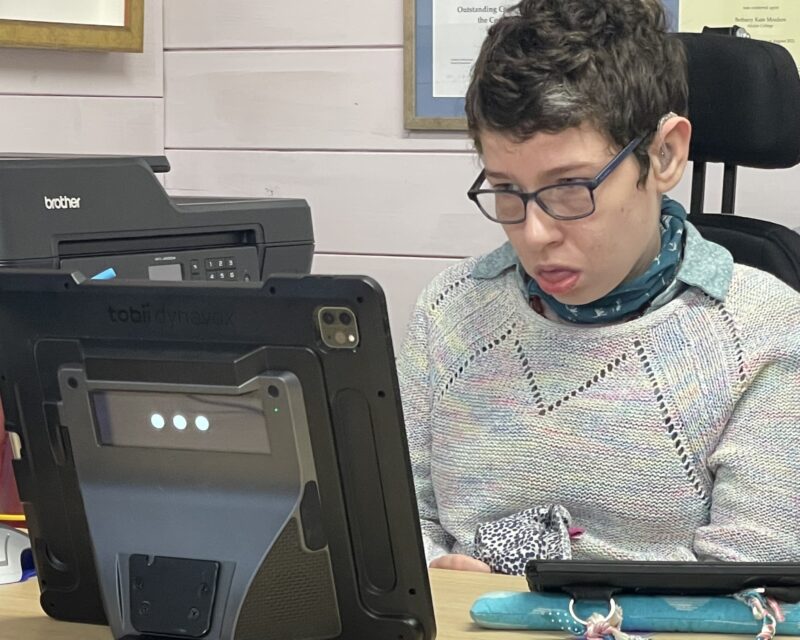

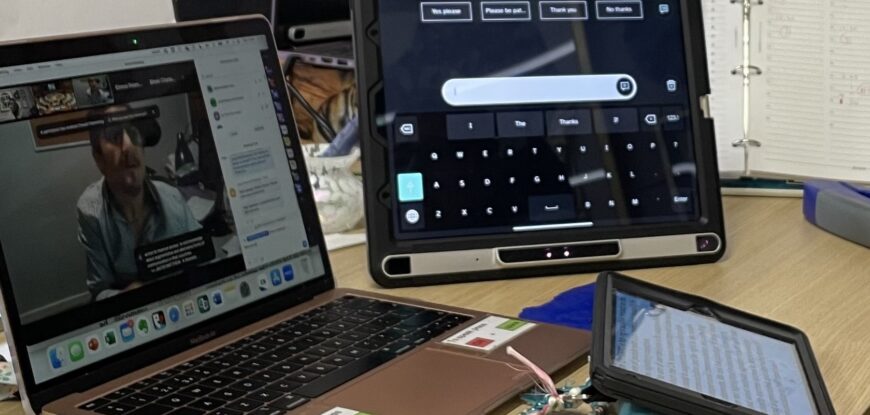

Acapela now allows users to create their own voices using AI neural digital software. Recently I recorded a new voice (my Mum) which I’ve been testing on the TD Pilot (the Tobii Dynavox eye gaze system combined with an iPad Pro). The new version is still in development so I’m waiting on further updates. The quality is good but not as good yet as Lucy’s. However, I recognise recording only 50 phrases to generate a new voice is pretty amazing too.

AI for speech prediction

For many years AI has driven developments for AAC users. (Although let us not forget that AAC was the driver for predictive texting.) The use of natural selection or prediction is invaluable in speeding up communication. I remember getting the Lightwriter SL40 Connect 14 years ago and this used natural selection. Suddenly I was being offered the words I would normally use as opposed to what had been decided by the software developer. This was in the context of what I had already written, not just prediction based on spelling.
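
For readers curious about the difference between prediction based on spelling and prediction based on context, here is a minimal sketch in Python. It is only an illustration under simple assumptions, not how the Lightwriter or any other AAC product actually works. The function names and the message history are hypothetical, invented for this example. The sketch counts which words have followed which in a user's past messages, then suggests next words that fit both the previous word and any letters typed so far.

```python
from collections import Counter, defaultdict


def build_history_model(history):
    """Count which word follows which in the user's past messages."""
    following = defaultdict(Counter)
    for message in history:
        words = message.lower().split()
        for previous, current in zip(words, words[1:]):
            following[previous][current] += 1
    return following


def predict(following, previous_word, typed_prefix="", limit=3):
    """Suggest next words that fit the previous word and the letters typed so far."""
    candidates = following.get(previous_word.lower(), Counter())
    matches = [
        (word, count)
        for word, count in candidates.items()
        if word.startswith(typed_prefix.lower())
    ]
    # Most frequent first; ties broken alphabetically so the order is stable.
    matches.sort(key=lambda item: (-item[1], item[0]))
    return [word for word, _ in matches[:limit]]


# Hypothetical message history, invented for this example.
history = [
    "my research is about communication",
    "my research is ongoing",
    "my voice is called lucy",
]
model = build_history_model(history)
suggestions = predict(model, "my", "r")
```

With this made-up history, the suggestions after "my" come from what this user has said before rather than from a fixed dictionary, and typing a letter narrows them further.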

Again, that’s something I love about using Proloquo4Text on my iPad and other Apple devices. The word prediction works from the history of what I’ve said before. The good news was that, because it interfaces with Acapela, I was able to retain Lucy.

More recently I’ve started using the TD Pilot for spontaneous conversation. I love both the ease of using it with my eyes and how the AI for speech prediction works. It’s not just words I might choose but also phrases. The only downside at present is there is limited storage for regularly used output. For instance, if someone asks me about my MRes I have a standard response on my iPad. On the TD Pilot, I can’t yet use the prediction feature to give a fuller answer: the prediction will only give me the first few phrases of that response. I am, however, hopeful that with further development work that will change.

Might AI for speech put words in my mouth?

I am a little wary of AI developments for speaking. What I 100% don’t want is words being put into my mouth. But I guess that is my/the user’s choice to not accept what is being offered unless it is exactly what we want to say.

AI and brainwave communication

A few years ago, at the ISAAC conference in Lisbon, I had the opportunity to try out brain wave technology. It was nowhere near ready for use but making a mouse quiver on the screen showed that maybe one day this was a potential option. However, it is hardly practical to wear a cap with gel in the hair for the electrodes on a day-to-day basis.

I don’t adhere to the medical model of disability. I am not broken and I don’t want to be fixed. My disability is something I’ve lived with all my life. My CP, speech and hearing impairments are what make me, me! I understand why some people have deep brain stimulation for dystonia. And, I guess I might consider it on health grounds if it would improve my quality of life. Every surgery, particularly to the brain, carries risk. I cannot see at this stage that the risk of having an implant to control a computer and generate speech is warranted over what I already have. Besides which, I am a visual thinker (images, symbols, colour), so would it work for me as I don’t think in words? And, how would it work for a developing child to have access to language? I’m pretty sure no implant will last a lifetime.

The future for communication and AI

It seems that all development focus by the technology giants is solely on those that have a voice, including voice banking for those who will lose theirs. For those who are unintelligible, we need to fight hard to keep our needs top of mind for developers. And, this isn’t just for communication but for all technology using AI. If creators can make their work accessible for those of us with complex disabilities it will only benefit everyone.

Software conflicts

Oh, and a word of caution. I’ve experienced issues around my daily reliance on great tech, mainly surrounding compatibility with other tech. For example, a new hearing aid app to control volume switched off the output on my AAC device and only sent the spoken message to my hearing aids. The immediate result was the app had to go. Then, the Bluetooth speaker I use to amplify my output when I deliver a presentation turns off the stylus I use to input speech on my iPad. So, it’s good that things are moving on, but not if I cannot output the words. What sounds great in principle can be challenging in practice. What would be amazing is the capacity to use AI to recognise all the software and hardware that those who are tech-reliant use, and then make sure everything works seamlessly together.

AI and tech as enablers

Without doubt, technology is a great enabler. Yet, there are challenges surrounding the very small size of a potential market, such as AAC. I love that companies like Microsoft are making their products more accessible to everyone. Apple has brought communication software for those that can use generic packages within easier reach. My TD Pilot combines an iPad Pro and the Tobii Dynavox hardware but wasn’t cheap. However, at the specialist end of communication, those with disabilities will probably continue to pick up the heavier cost of developing suitable software and hardware. Voice recognition software is a case in point. Despite the fanfare for something enabling there is always a spectrum of potential users. Sadly, there will always be people like me who cannot access it effectively.

Within the AAC market, each individual is unique, and every device needs an element of customisation. We do not even all use the same hardware or software, because our needs are different. I know very few AAC users who use their devices in exactly the same way. This varies from input methods to customisation of the software, to the language needed. Personalisation is the key to success. Might the versatility of AI for speech mean personalisation becomes the new norm? I hope so! Just like being able to record your own voice quickly and relatively cost-effectively. Who knows? I for one will be avidly waiting to see what happens next.

PS. I use Grammarly when I’m blogging which combines AI with the rules of writing. Basically, I write and then use it to check my spelling and punctuation. It doesn’t always like ‘English’ and puts in far more commas than I use so I override the suggestions if needed.

If you found this interesting or helpful please feel free to share.